Last year my colleagues and I had the pleasure of spending two days with @hamvocke and @diegopeleteiro from @thoughtworks reviewing the platform we created. One essential part of our discussions was about CI/CD, which they described like this: “think about continuous delivery as a journey. Imagine every git push lands on production. This is your target, this is what your CD should enable.”

Even if (or maybe because) this thought scared the hell out of us, it became our vision for the next few months, because we saw the great opportunities we would gain if we were able to work this way.

Let me describe the context we were working in:

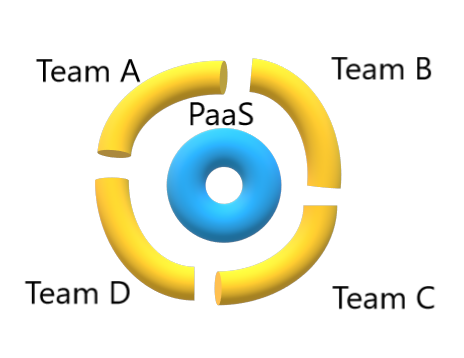

- Four business teams, 100% self-organized, owning 1…n Self-contained Systems, creating microservices running as Docker containers orchestrated with Kubernetes, hosted on AWS.

- Boundaries (as in Domain-Driven Design) defined based on the business we were in.

- Each team having full ownership of and full accountability for their part of the business (represented by the SCS).

- Basic heuristics regarding source code organisation: “share nothing” about business logic, “share everything” about utility functions (in OSS manner), about the experience you gained, about the lessons you learned, about the mistakes you made.

- Ensuring code quality and software quality is 100% the team's responsibility.

- You build it, you run it.

- One Platform-as-a-Service team to enable these business teams to deliver features fast.

- GitLab as version control system, Jenkins as build server, Nexus as package repository.

- Trunk-based development, no cherry picking, “roll fast forward” over roll back.

The architecture we chose was meant to support our organisation: independent teams able to work and deliver features fast and independently. They should decide for themselves when and what they deploy. In order to achieve this, we defined a few rules regarding inter-system communication. The most important ones are:

- Event-driven architecture: no synchronous communication, only asynchronous communication via the Domain Event Bus.

- Non-blocking systems: every SCS must remain functional (possibly with reduced functionality) even if all the other systems are down.
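A minimal sketch of how these two rules can play together, assuming a simple in-memory stand-in for the Domain Event Bus. The names `DomainEventBus`, `OrderSystem` and the `CustomerRenamed` event are hypothetical and only serve as illustration; a real bus would be a message broker that delivers events asynchronously.

```typescript
// Hypothetical event shape; names are illustrative, not taken from the platform.
type DomainEvent = { type: string; payload: Record<string, unknown> };
type Handler = (event: DomainEvent) => void;

// In-memory stand-in for a Domain Event Bus. A real bus would be a broker
// that delivers events asynchronously; here handlers are simply called in order.
class DomainEventBus {
  private handlers = new Map<string, Handler[]>();

  subscribe(type: string, handler: Handler): void {
    const existing = this.handlers.get(type) ?? [];
    this.handlers.set(type, [...existing, handler]);
  }

  publish(event: DomainEvent): void {
    for (const handler of this.handlers.get(event.type) ?? []) {
      handler(event);
    }
  }
}

// A self-contained system keeps its own copy of the data it needs,
// fed only by events from the bus.
class OrderSystem {
  private customerNames = new Map<string, string>();

  constructor(bus: DomainEventBus) {
    bus.subscribe("CustomerRenamed", (event) => {
      const id = String(event.payload["id"]);
      const name = String(event.payload["name"]);
      this.customerNames.set(id, name);
    });
  }

  // Answers from local state only and never calls another system directly,
  // so it keeps working (with reduced information) if the others are down.
  describeOrder(orderId: string, customerId: string): string {
    const name = this.customerNames.get(customerId) ?? "unknown customer";
    return `Order ${orderId} for ${name}`;
  }
}

const bus = new DomainEventBus();
const orders = new OrderSystem(bus);
bus.publish({ type: "CustomerRenamed", payload: { id: "c1", name: "Ada" } });
```

In this sketch `orders.describeOrder("o1", "c1")` uses the name learned from the event, while an order for a customer the system has never heard of falls back to a placeholder instead of failing.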

We had only a couple of exceptions to these rules. As an example: authentication doesn’t really make sense in an asynchronous manner.

Working in self-organized, independent teams is a really cool thing. But:

> with great power there must also come great responsibility
>
> Uncle Ben to his nephew

Even though we set some guardrails regarding the overall architecture, the teams still had ownership of the internal architecture decisions. Because we didn’t have continuous delivery in place at the beginning, every team alone was responsible for deploying its systems. Due to the missing automation, we were not only bound to make human errors but also blind to the couplings between our services. (And of course we spent a lot of time doing things manually instead of letting Jenkins or GitLab or some other tool do them for us 🤔)

One example: every one of our systems had at least one React app and a GraphQL API as the main communication (read/write/subscribe) channel. One of the best things about GraphQL is the possibility to include the GraphQL schema in the React app, so that the API interface definition is part of the client application.

Isn’t that cool? It can be. Or it can lead to some very smelly behavior, to really tight coupling and to the inability to deploy the app and the API independently. And just like my friend @etiennedi says: “If two services cannot be deployed independently they aren’t two services!”

This was the first lesson we learned on this journey: if you don’t have a CD pipeline, you will most probably hide the flaws of your design.

One can surely ask “what is the problem with manual deployment?” Nothing, if you have only a few services to handle, and if everyone on your team knows about these couplings and dependencies and is able to execute the very precise deployment steps needed to minimize downtime. But otherwise? This method doesn’t scale and is not very professional. And the biggest problem: it ignores the possibilities Kubernetes offers to safely roll out, take down, or scale everything you have built.

An automated, standardized CD pipeline as described at the beginning, with the goal that every commit lands on production in a few seconds, forces everyone to think about the consequences of their commits, to write backwards-compatible code, and to become a more thoughtful developer.
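To make “backwards compatible” a bit more concrete for the GraphQL example: one possible pipeline step is a check that the new API schema does not remove anything an already deployed app may still query. The following is only a sketch under strong simplifications. The `SchemaShape` model and `findRemovedFields` are hypothetical, and a real GraphQL schema also has arguments, nullability and more that this ignores.

```typescript
// Simplified schema model: type name -> list of field names.
// This is an assumption for illustration, not a real GraphQL schema object.
type SchemaShape = Record<string, string[]>;

// Returns "Type.field" entries that exist in the old schema but are
// missing in the new one, i.e. candidates for breaking changes.
function findRemovedFields(oldSchema: SchemaShape, newSchema: SchemaShape): string[] {
  const removed: string[] = [];
  for (const [typeName, fields] of Object.entries(oldSchema)) {
    const newFields = new Set(newSchema[typeName] ?? []);
    for (const field of fields) {
      if (!newFields.has(field)) {
        removed.push(`${typeName}.${field}`);
      }
    }
  }
  return removed;
}

// Schema currently used by the deployed app (illustrative data).
const deployed: SchemaShape = { Product: ["id", "name", "price"] };
// Adding a field keeps old clients working.
const additive: SchemaShape = { Product: ["id", "name", "price", "currency"] };
// Renaming a field removes the old name and breaks old clients.
const breaking: SchemaShape = { Product: ["id", "title", "price"] };

const additiveProblems = findRemovedFields(deployed, additive);
const breakingProblems = findRemovedFields(deployed, breaking);
```

Here `additiveProblems` stays empty, while `breakingProblems` reports `Product.name`. A pipeline could fail the build on a non-empty result, which forces the rename to happen in two deployable steps: add the new field first, remove the old one once no client uses it.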

to be continued …